Samsung puts processing-in-memory chip onto AMD MI100 GPU • The Register

Samsung has built a claimed first-of-its-kind supercomputer containing AMD datacenter GPUs affixed with its processing-in-memory chips, which the company said can significantly improve the performance and energy efficiency of training large AI models.

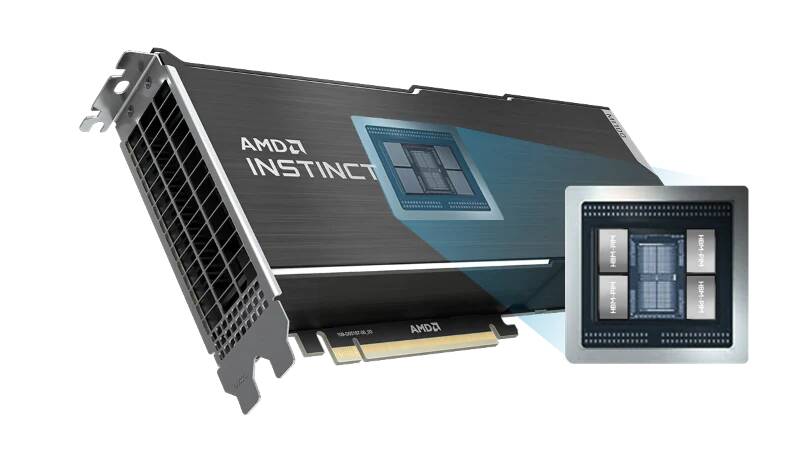

The supercomputer, disclosed Tuesday at an industry event in South Korea, includes 96 AMD Instinct MI100 GPUs, each of which is loaded with a processing-in-memory (PIM) chip, a new kind of memory technology that reduces the amount of data that needs to move between the processor and DRAM.

Choi Chang-kyu, head of the AI Research Center at Samsung Electronics Advanced Institute of Technology, reportedly said the cluster trained Google's Text-to-Text Transfer Transformer (T5) language model 2.5 times faster, while using 2.7 times less power, than the same cluster configuration without the PIM chips.

“It is the only one of its kind in the world,” Choi said.

Samsung has said its PIM tech has major implications for energy consumption and the environment, claiming it can cut a cluster's annual energy use by 2,100 gigawatt-hours and, consequently, its carbon emissions by 960,000 tons.

As always, we should reserve judgement until these claims can be tested and verified independently, but the company said such a reduction in emissions is equivalent to the carbon that 16 billion urban trees would absorb over a decade.
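To make Samsung's figures easier to scrutinize, the sketch below works through the arithmetic they imply, using only the numbers quoted above. One assumption is ours, not Samsung's: "tons" is read as metric tonnes (1,000 kg). The script derives the grid emission factor that links the claimed energy and CO2 savings, and the per-tree uptake the tree comparison would require, both of which readers can check against published estimates.

```python
# Worked arithmetic on Samsung's claimed savings, using only the figures quoted in the article.
# Assumption (not stated by Samsung): "tons" means metric tonnes of 1,000 kg.

ENERGY_SAVED_GWH = 2_100        # claimed annual energy saving
CO2_SAVED_TONNES = 960_000      # claimed annual CO2 reduction
TREES = 16_000_000_000          # urban trees in Samsung's comparison
YEARS = 10                      # absorption period used in the comparison

KWH_PER_GWH = 1_000_000
KG_PER_TONNE = 1_000


def implied_carbon_intensity_kg_per_kwh(gwh: float, tonnes: float) -> float:
    """CO2 avoided per kWh saved, i.e. the emission factor the two claims imply together."""
    return (tonnes * KG_PER_TONNE) / (gwh * KWH_PER_GWH)


def implied_uptake_kg_per_tree_year(tonnes: float, trees: int, years: int) -> float:
    """CO2 each tree would have to absorb per year for the tree comparison to hold."""
    return (tonnes * KG_PER_TONNE) / (trees * years)


intensity = implied_carbon_intensity_kg_per_kwh(ENERGY_SAVED_GWH, CO2_SAVED_TONNES)
per_tree = implied_uptake_kg_per_tree_year(CO2_SAVED_TONNES, TREES, YEARS)

print(f"Implied emission factor: {intensity:.3f} kg CO2/kWh")   # 0.457
print(f"Implied uptake per tree: {per_tree * 1000:.1f} g CO2/year")  # 6.0
```

The first figure, roughly 0.457 kg of CO2 per kWh, is the grid carbon intensity implicitly assumed by pairing 2,100 GWh with 960,000 tonnes.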

One big reason the PIM-powered supercomputer has so much horsepower is that each PIM chip is built on high-bandwidth memory (HBM), which the industry is increasingly turning to for high-performance computing and AI workloads. Nvidia and AMD have used HBM in datacenter GPUs for multiple generations now, and Intel plans to introduce HBM in a forthcoming variant of its server processors, branded Xeon Max, as well as in a high-end datacenter GPU.

What makes Samsung's HBM-PIM chips different from other companies' HBM implementations is that each memory bank on the PIM chip includes its own processing unit. This, according to the South Korean electronics giant, eases the bottlenecks associated with moving data between the processor and memory by shifting some of the computation into the memory itself.
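The data-movement argument can be illustrated with a toy model. The sketch below is not Samsung's HBM-PIM design: the bank count, the 2-byte element size, and the assumption that partial sums travel at the same width as the elements are all hypothetical parameters chosen for illustration. It sums a vector striped across memory banks two ways: host-side, where every element crosses the memory interface, and PIM-style, where each bank reduces its own slice and only one partial sum per bank crosses.

```python
# Toy model of why computing inside memory banks can cut data movement.
# Hypothetical parameters throughout; this is not Samsung's actual HBM-PIM architecture.

from typing import List, Tuple

BYTES_PER_ELEMENT = 2  # assume FP16-sized values; partial sums assumed to be sent at the same width


def stripe(data: List[float], num_banks: int) -> List[List[float]]:
    """Distribute elements round-robin across memory banks."""
    return [data[b::num_banks] for b in range(num_banks)]


def host_side_sum(banks: List[List[float]]) -> Tuple[float, int]:
    """Processor reads every element over the memory interface, then adds."""
    total, moved = 0.0, 0
    for bank in banks:
        for x in bank:
            moved += BYTES_PER_ELEMENT  # each element crosses the interface
            total += x
    return total, moved


def in_bank_sum(banks: List[List[float]]) -> Tuple[float, int]:
    """Each bank's (hypothetical) processing unit reduces its slice locally;
    only one partial sum per bank crosses the interface."""
    partials = [sum(bank) for bank in banks]
    moved = len(partials) * BYTES_PER_ELEMENT
    return sum(partials), moved


data = [float(i) for i in range(1024)]
banks = stripe(data, num_banks=16)
host_total, host_bytes = host_side_sum(banks)
pim_total, pim_bytes = in_bank_sum(banks)
print(host_total, host_bytes)  # 523776.0 2048
print(pim_total, pim_bytes)    # 523776.0 32
```

Both approaches produce the same result, but in this model the in-bank version moves 64 times fewer bytes across the interface, which is the kind of saving PIM is designed to exploit for bandwidth-bound operations.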

Samsung hopes to spur adoption of its PIM chips by creating a software stack that lets organizations use the tech in an integrated programming environment. To do this, it's relying on SYCL, a royalty-free, cross-architecture programming abstraction layer that happens to underpin Intel's Data Parallel C++ implementation for its oneAPI parallel programming model.

The company has been hyping up PIM for nearly three years now, and another way it plans to take the tech to market is through what it calls AXDIMM, short for accelerated DIMM.

We'll know whether Samsung is making inroads if PIM starts appearing in new supercomputers set up by research labs, academic institutions, and other organizations over the next few years. ®