New Epyc, Ryzen, Instinct chips through 2024 • The Register

After taking serious CPU market share from Intel over the last few years, AMD has revealed larger ambitions in AI, datacenters and other areas with an expanded roadmap of CPUs, GPUs and other kinds of chips for the near future.

These ambitions were laid out at AMD’s Financial Analyst Day 2022 event on Thursday, where it signaled intentions to become a tougher competitor for Intel, Nvidia and other chip companies with a renewed focus on building better and faster chips for servers and other devices, becoming a bigger player in AI, enabling applications with improved software, and making more custom silicon.

“These are where we think we can win in terms of differentiation,” AMD CEO Lisa Su said in opening remarks at the event. “It’s about compute technology leadership. It’s about expanding datacenter leadership. It’s about expanding our AI footprint. It’s expanding our software capability. And then it’s really bringing together a broader custom solutions effort because we think this is a growth area going forward.”

At the event, AMD revealed new roadmaps for its Epyc server chips and Ryzen client chips as well as plans to introduce a new kind of hybrid CPU-GPU chip for datacenters and the aim of integrating AI engines from its Xilinx acquisition into multiple products in the future.

Signaling that it has a way to catch up with Nvidia on the AI computing front, the company also announced plans for a unified software interface for programming AI applications on different kinds of chips, somewhat akin to Intel’s oneAPI toolkits.

The chip designer is keen on expanding its custom chip business beyond the video game console space into new areas like hyperscale datacenters, automotive and 5G.

“We already have a very broad high-performance portfolio. We already have the leading industry platform for chiplets. But what we’re doing is we’re going to make it much easier to add third-party IP as well as customer IP to that chiplet platform,” Su said.

Big boost promised for Zen 4, AI-optimized Zen 5 coming in 2024

AMD shared more details about what to expect from upcoming chips using its Zen 4 CPU core architecture, including the Ryzen 7000 desktop chips and Epyc Genoa server chips coming later this year.

It also teased the next-generation Zen 5 architecture, which will arrive in 2024 with integrated AI and machine learning optimizations along with enhanced performance and efficiency.

AMD claimed Zen 4, first previewed last fall, will be the first high-performance x86 architecture to use a 5nm manufacturing process, and promised an 8–10 percent increase in instructions per clock (IPC) over Zen 3. IPC is the main way chip designers measure performance for new architectures. By comparison, Zen 3, which debuted in 2020, provided a larger IPC increase of roughly 19 percent over Zen 2.

While the IPC gain may be a little modest, AMD said we should expect a “significant generational performance-per-watt and frequency improvement” along with a more than 15 percent boost in single-threaded performance and up to 125 percent more memory bandwidth per core. Zen 4 chips will also come with instruction set extensions for AI and AVX-512.
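As a rough illustration of how those two figures fit together, the sketch below assumes single-threaded performance scales as IPC multiplied by clock frequency. That model is a simplification of ours, not AMD's stated methodology, and the function name is hypothetical. Under that assumption, a 15 percent single-threaded gain paired with an 8–10 percent IPC gain would imply a clock uplift of roughly 4.5 to 6.5 percent; since AMD said "more than 15 percent," the real uplift could be higher.

```python
# Simplified model (an assumption, not AMD's methodology):
# single-threaded performance ~ IPC x clock frequency.

def implied_frequency_gain(single_thread_gain: float, ipc_gain: float) -> float:
    """Return the fractional clock uplift implied by the two quoted gains."""
    return (1.0 + single_thread_gain) / (1.0 + ipc_gain) - 1.0

# 15% is the floor AMD quoted for single-threaded gains; 8-10% is the IPC range.
low = implied_frequency_gain(0.15, 0.10)   # upper end of the IPC range
high = implied_frequency_gain(0.15, 0.08)  # lower end of the IPC range
print(f"Implied clock uplift: {low:.1%} to {high:.1%}")
```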

To illustrate what those “significant” improvements will look like, AMD said a Zen 4 desktop CPU with 16 cores – presumably from the Ryzen 7000 lineup – will provide more than a 25 percent boost in performance-per-watt and a greater than 35 percent bump in overall performance over Zen 3.
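Those two numbers also hint at power draw. The sketch below is a back-of-the-envelope calculation under the assumption that both figures sit exactly at their stated floors (35 percent and 25 percent) and describe the same workload; AMD gave only lower bounds, so the result is illustrative rather than a specification. The variable names are ours.

```python
# Assumption: both gains are taken at their quoted floors and apply to the
# same workload. AMD only said "more than" for each, so this is illustrative.
performance_gain = 0.35      # overall performance vs Zen 3
perf_per_watt_gain = 0.25    # performance-per-watt vs Zen 3

# performance = (performance per watt) x watts, so the power ratio is
# the performance ratio divided by the performance-per-watt ratio.
power_ratio = (1.0 + performance_gain) / (1.0 + perf_per_watt_gain)
print(f"Implied power change: {power_ratio - 1.0:+.0%}")
```

At those floors the chip would draw about 8 percent more power than its Zen 3 counterpart; a larger efficiency gain than the quoted minimum would shrink that figure.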

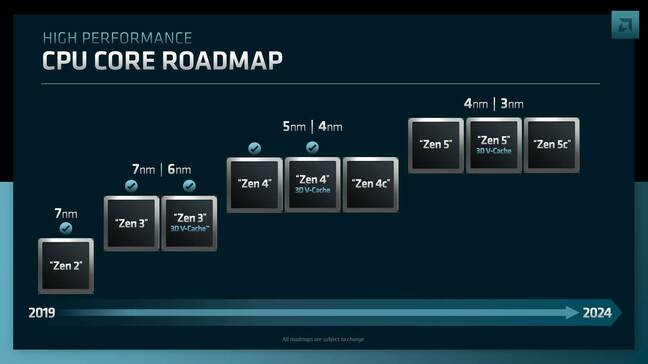

In an updated Zen roadmap, AMD disclosed that Zen 4 will use both 5nm and 4nm process nodes for different products and that there will be a version of Zen 4 that uses its vertical cache technology, which AMD debuted with its Ryzen 7 5800X3D desktop chip and its Epyc Milan-X server chips earlier this year. This is in addition to the Zen 4c architecture AMD is using for its cloud-optimized Epyc Bergamo chips.

Starting in 2024, the situation for Zen 5 will be similar. There will be a vertical cache variant along with a Zen 5c variant for cloud-optimized chips.

Epyc Genoa in Q4, with new specialty server chips in 2023

After first teasing the Epyc chips last fall, AMD said it’s on track to launch Genoa, its next generation of general-purpose server CPUs, in the fourth quarter. The company will follow that with Bergamo, its first lineup of cloud-optimized server CPUs, in the first half of 2023.

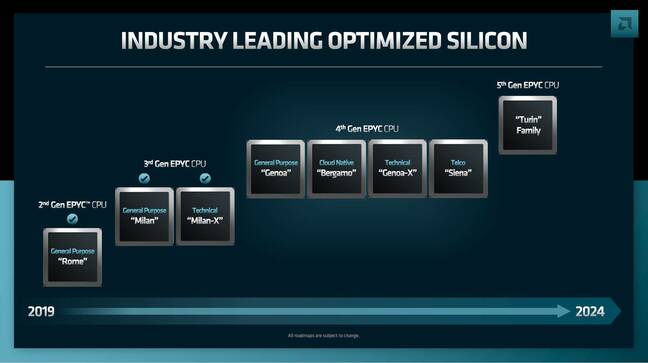

Starting with the third-generation Epyc chips that debuted in 2021, AMD has been branching Epyc into versions that serve different product areas. For third-gen Epyc, the product groups were divided between general-purpose chips, collectively known under the code name Milan, and processors optimized for technical computing, known as Milan-X. With the upcoming Zen 4c-powered Bergamo chips, Epyc is expanding again, this time to cloud-optimized models.

With the general-purpose Genoa chips coming later this year, AMD is promising to deliver “leadership socket and per-core performance” with up to 96 Zen 4 cores as well as “leadership memory bandwidth and capacity” with up to 12 channels of DDR5 memory. As a teaser, the chip designer said the top Genoa chip will provide more than 75 percent faster Java performance than its top third-gen Epyc chip.

Genoa chips will come with PCIe 5 connectivity and memory expansion capabilities with Compute Express Link (CXL). AMD said we should also expect advances in confidential computing features with Genoa, including memory encryption as well as CXL-related capabilities.

As for Bergamo, AMD is promising “cloud-native leadership” and teased that the top chip in the line will provide double the cloud container density versus its top third-gen Epyc chip. This gain is being driven by the fact that Bergamo chips will feature up to 128 Zen 4c cores and up to 256 threads while supporting 12 channels of memory and PCIe 5.
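The arithmetic behind the density claim can be sketched as follows. The Bergamo figures come from AMD's announcement; the 64-core count for the top third-gen Epyc chip is our assumption, since the figure was not quoted at the event, and the idea that container density tracks core count is a simplification.

```python
# Figures AMD quoted for the top Bergamo chip.
BERGAMO_CORES = 128
BERGAMO_THREADS = 256

# Assumption: the top third-gen Epyc chip has 64 cores (not stated at the event).
THIRD_GEN_TOP_CORES = 64

# Two threads per core is consistent with simultaneous multithreading.
threads_per_core = BERGAMO_THREADS // BERGAMO_CORES

# Simplification: treat container density as proportional to core count.
core_ratio = BERGAMO_CORES / THIRD_GEN_TOP_CORES

print(f"Threads per core: {threads_per_core}")
print(f"Core count ratio vs third-gen Epyc: {core_ratio:.1f}x")
```

Under those assumptions the core count doubles, which lines up with the "double the cloud container density" claim.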

AMD said Bergamo will be compatible with Genoa’s SP5 server platform and will not require the rewriting of any code for applications, as it is compatible with the Zen 4 instruction set.

With the general-purpose Genoa chips and cloud-optimized Bergamo chips coming, AMD revealed it will extend the Zen 4 architecture to two other sets of Epyc chips. The first – Genoa-X – will serve as the successor to Milan-X, and will target technical computing and database applications with up to 96 cores and a massive L3 cache of more than 1GB per socket.

The second – Siena – represents two new focus areas for Epyc chips: the intelligent edge, and telecommunications. It will come with up to 64 cores in a “lower cost platform” as well as optimized performance-per-watt.

As for what comes in 2024, AMD teased a fifth generation of Epyc that will carry the code name Turin.

Instinct MI300 to combine Epyc CPU, CDNA 3 GPU

Beyond CPUs in the datacenter, AMD is signaling bolder ambitions to compete with Nvidia and Intel in the accelerator space, thanks to its newly disclosed Instinct MI300 chip that will mix a Zen 4-based Epyc CPU with a GPU that uses its fresh CDNA 3 architecture.

The chip designer is calling the Instinct MI300, which will arrive in 2023, the “world’s first datacenter APU” – referring to the “accelerated processing unit” nomenclature AMD has traditionally used for client CPUs that come with integrated graphics.

This means that in the next two years, AMD, Nvidia and Intel will all have hybrid CPU-GPU chips entering the datacenter market. Nvidia’s Grace Hopper Superchip is due early next year and Intel’s Falcon Shores XPU will be coming in 2024.

AMD claims the Instinct MI300 will deliver a greater than 8x boost in AI training performance over its Instinct MI250X, which launched last fall as part of a set of datacenter GPUs that are more competitive against Nvidia’s flagship A100 than previous attempts. It’s also promising “leadership memory bandwidth and application latency.”

Compared to the CDNA 2 GPU architecture that powers the Instinct MI200 series, AMD said the CDNA 3 architecture being used for the Instinct MI300 will provide more than a 5x uplift in performance-per-watt for AI workloads. This will be made possible by using a 5nm process, 3D chiplet packaging, a unified memory architecture that will allow the CPU and GPU to share memory, plus new generations of AMD’s Infinity Cache and Infinity Architecture.

AMD said the Instinct MI300 will use “groundbreaking” 3D packaging for the CPU, GPU, cache and high-bandwidth memory. Plus, the architecture’s design will allow the APU to use less power than implementations that rely on discrete CPUs and GPUs.

AMD plans to use Xilinx’s AI engine for multiple products

It’s been a few months since AMD completed its $49 billion acquisition of FPGA designer Xilinx, and after teasing some integration plans last month, the company is providing more details of how it plans to use Xilinx’s AI engine and FPGA fabric technologies in multiple products.

Moving forward, the AI engine and FPGA fabric from Xilinx will be known as “adaptive architecture” building blocks under the name XDNA.

The AI engine is built with a so-called “dataflow architecture” that makes it well-suited for AI and signal processing applications that need a mix of high performance and energy efficiency.

The FPGA fabric, on the other hand, serves as an adaptive interconnect that comes with FPGA logic and local memory.

After teasing the idea in May, AMD said it plans to use the AI engine in future Ryzen processors, including two future generations of laptop CPUs coming over the next few years. The company also teased that it will use the AI engine in future Epyc CPUs.

AMD promises Unified AI Stack software

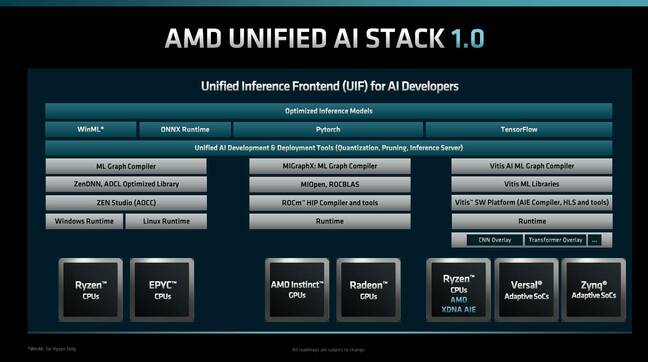

To help it capitalize on larger ambitions in AI computing, AMD promised that it will unify previously disparate software stacks for CPUs, GPUs and adaptive chips from Xilinx into one, with the goal of giving developers a single interface to program across different kinds of chips.

The effort will be called the Unified AI Stack, and the first version will bring together AMD’s ROCm software for GPU programming, its CPU software and Xilinx’s Vitis AI software.

AMD said this will give developers access to optimized inference models and the ability to use popular AI frameworks like PyTorch and TensorFlow across its wider portfolio of chips.

“We’re going to unify even more of the middleware. We’re going to have commonality in terms of our ML graph compiler, have much more commonality in our library APIs. And by the way, we’re definitely also going to roll out a lot more pre-optimized models for these targets,” said Victor Peng, Xilinx’s former CEO who is now head of AMD’s Adaptive and Embedded Computing Group.

New Ryzen CPUs, plus RDNA 3-based Radeon GPUs coming

At the tail end of the event, AMD shared some details of new consumer CPU and GPU products coming out over the next few years.

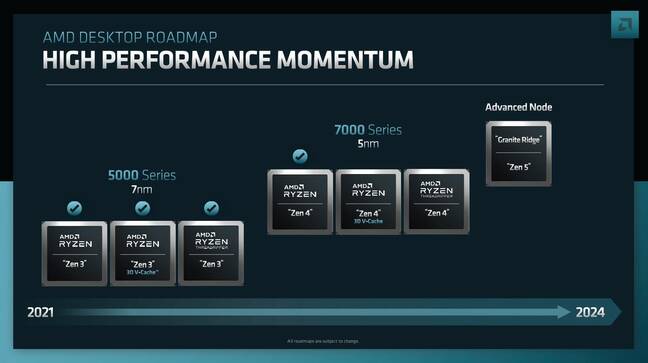

With the Ryzen 7000 desktop chips coming this fall, AMD said consumers can expect two other kinds of desktop CPUs using its 5nm Zen 4 architecture in the future: one that will use the 3D vertical cache technology that debuted with the Ryzen 7 5800X3D earlier this year, and a new generation of the Threadripper CPUs that were, at least for a time, fairly elusive earlier this year.

In 2024, AMD expects to debut desktop chips that will use the Zen 5 architecture on an advanced node with the code name Granite Ridge.

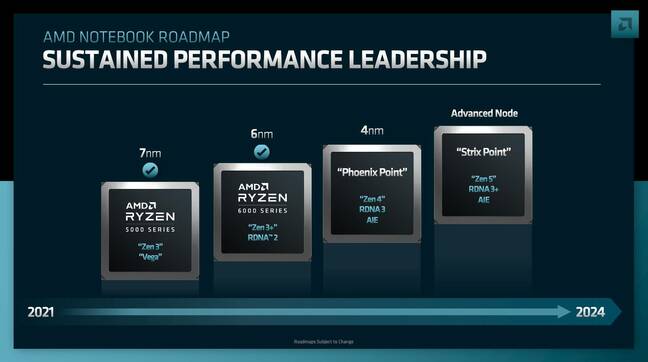

On the laptop side, AMD teased a new generation of Ryzen chips, code-named Phoenix Point, coming in 2023 that will use Zen 4 plus the company’s new RDNA 3 architecture for integrated graphics. The company will follow that in 2024 with the next generation, known as Strix Point, which will use Zen 5 and an improved version of RDNA 3 called RDNA 3+. As mentioned before, both chips will use AI engines from AMD’s XDNA adaptive architecture portfolio.

The newly disclosed RDNA 3 architecture will serve as the basis for future Radeon GPUs, including the Navi 3 products coming later this year. AMD said Navi 3 will provide “industry-leading performance-per-watt” as well as “system-level efficiency” and “advanced multimedia capabilities.”

RDNA 3 will combine a chiplet design, a next-generation Infinity Cache and a 5nm process, which AMD said will allow it to provide a greater than 50 percent boost in performance-per-watt compared to the RDNA 2 architecture that is powering the most recent Radeon products. AMD will follow that in 2024 with the RDNA 4 architecture, which will use an advanced node. ®
