Full-text search your own Mastodon posts with R

Whether you’ve fully migrated from Twitter to Mastodon, are just trying out the “fediverse,” or have been a longtime Mastodon user, you may miss being able to search through the full text of “toots” (also known as posts). In Mastodon, hashtags are searchable but other, non-hashtag text is not. The unavailability of full-text search lets users control how much of their content is easily discoverable by strangers. But what if you want to be able to search your own posts?

Some Mastodon instances allow users to do full-text searches of their own toots but others don’t, depending on the admin. Fortunately, it’s easy to full-text search your own Mastodon posts, thanks to R and the rtoot package developed by David Schoch. That’s what this article is about.

Set up a full-text search

First, install the rtoot package if it’s not already on your system with install.packages("rtoot"). I’ll also be using the dplyr and DT packages. All three can be loaded with the following command:

# install.packages("rtoot") # if needed

library(rtoot)

library(dplyr)

library(DT)

Next, you’ll need your Mastodon ID, which is not the same as your user name and instance. The rtoot package includes a way to search across the fediverse for accounts. That’s a useful tool if you want to see if someone has an account anywhere on Mastodon. But since it also returns account IDs, you can use it to find your own ID, too.

To search for my own ID, I’d use:

accounts <- search_accounts("[email protected]")

That will likely bring back a data frame with only one result. If you search for just a user name and no instance, such as search_accounts("posit") to see if Posit (formerly RStudio) is active on Mastodon, there could be more results.

My search had only one result, so my ID is the first (as well as only) item in the id column:

my_id <- accounts$id[1]
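If a search does return several matches, taking the first row could grab the wrong account. Here is a minimal sketch of picking a row by its full handle instead. It uses a made-up data frame in place of a real search_accounts() result. The acct column name is an assumption based on Mastodon's account fields, and the handles and IDs are hypothetical:

```r
library(dplyr)

# Hypothetical stand-in for what search_accounts("someuser") might return.
accounts_demo <- tibble(
  id = c("1001", "1002"),
  username = c("someuser", "someuser"),
  acct = c("someuser@example.social", "someuser@another.example")
)

# Keep only the row whose full handle matches, then extract its ID.
picked_id <- accounts_demo |>
  filter(acct == "someuser@example.social") |>
  pull(id)

picked_id
```

Matching on the full handle is a little more typing than accounts$id[1], but it doesn't depend on the order in which results come back.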

I can now retrieve my posts with rtoot’s get_account_statuses() function.

Pull and save your data

By default, get_account_statuses() returns 20 results, at least for now, though the ceiling appears to be much higher if you set it manually with the limit argument. Do be kind about taking advantage of this setting, however, since most Mastodon instances are run by volunteers who have recently faced sharply higher hosting costs.

The first time you try to pull your own data, you’ll be asked to authenticate. I ran the following to get my most recent 50 posts (note the use of verbose = TRUE to see any messages that might be returned):

smach_statuses <- get_account_statuses(my_id, limit = 50, verbose = TRUE)

Next, I was asked if I wanted to authenticate. After choosing yes, I received the following query:

On which instance do you want to authenticate (e.g., "mastodon.social")?

Next, I was asked:

What type of token do you want?

1: public

2: user

Since I want the authority to see all activity in my own account, I chose user. The package then stored an authentication token for me and I could then run get_account_statuses().
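If you'd rather set up the token ahead of time than answer prompts in the middle of a data pull, rtoot also has an auth_setup() function. This is a sketch only: the instance name is a placeholder borrowed from the prompt above, and because the call opens a browser for authorization, it is wrapped to run only in an interactive session:

```r
library(rtoot)

# "mastodon.social" is a placeholder; use the instance that hosts your account.
if (interactive()) {
  auth_setup(instance = "mastodon.social", type = "user")
}
```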

The resulting data frame—which was actually a tibble, a special type of data frame used by tidyverse packages—includes 29 columns. A few are list-columns such as account and media_attachments with non-atomic results, meaning results are not in a strict two-dimensional format.

I suggest saving this result before going further so you don’t need to re-ping the server in case something goes awry with your R session or code. I usually use saveRDS, like so:

saveRDS(smach_statuses, "smach_statuses.Rds")

Trying to save results as a parquet file does not work due to the complex list columns. Using the vroom package to save as a CSV file works and includes the full text of the list columns. However, I’d rather save as a native .Rds or .Rdata file.
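To check that list-columns survive a trip through an .Rds file, here is a small sketch that uses a made-up tibble rather than real Mastodon data, along with a temporary file so nothing is written to your project folder:

```r
library(dplyr)

# Small stand-in for a statuses tibble, with one list-column.
statuses_demo <- tibble(
  id = c("1", "2"),
  content = c("<p>First post</p>", "<p>Second post</p>"),
  media_attachments = list(list(), list(list(type = "image")))
)

# Save to a temporary file, then read it back in.
rds_path <- tempfile(fileext = ".Rds")
saveRDS(statuses_demo, rds_path)
restored <- readRDS(rds_path)

# Compare the restored object with the original, list-column included.
all.equal(restored, statuses_demo)
```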

Create a searchable table with your results

If all you want is a searchable table for full-text searching, you only need a few of those 29 columns. You will definitely want created_at, url, spoiler_text (if you use content warnings and want those in your table), and content. If you miss seeing engagement metrics on your posts, add reblogs_count, favourites_count, and replies_count.

Below is the code I use to create data for a searchable table for my own viewing. I added a URL column to create a clickable >> with the URL of the post, which I then add to the end of each post’s content. That makes it easy to click through to the original version:

tabledata <- smach_statuses |>

filter(content != "") |>

# filter(visibility == "public") |> # If you want to make this public somewhere. Default includes direct messages.

mutate(

url = paste0("<a target='blank' href='", uri,"'><strong> >></strong></a>"),

content = paste(content, url),

created_at = as.character(as.POSIXct(created_at, format = "%Y-%m-%d %H:%M UTC"))

) |>

select(CreatedAt = created_at, Post = content, Replies = replies_count, Favorites = favourites_count, Boosts = reblogs_count)

If I were sharing this table publicly, I’d make sure to uncomment filter(visibility == "public") so only my public posts were available. The data returned by get_account_statuses() for your own account includes posts that are unlisted (available to anyone who finds them but not on public timelines by default) as well as those that are set for followers only or direct messages.
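The created_at line in the code above turns the timestamp into text. If you want more control over how it displays, such as dropping the seconds, format() is one option. This is a small sketch that assumes created_at is already a date-time (POSIXct) value. The sample timestamp is made up:

```r
# A made-up timestamp standing in for one created_at value.
created_at_demo <- as.POSIXct("2022-12-01 14:30:45", tz = "UTC")

# Drop the seconds and keep the time in UTC.
created_label <- format(created_at_demo, "%Y-%m-%d %H:%M", tz = "UTC")

created_label
```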

There are a lot of ways to turn this data into a searchable table. One way is with the DT package. The code below creates an interactive HTML table with search filter boxes that can use regular expressions. (See Do more with R: Quick interactive HTML tables to learn more about using DT.)

DT::datatable(tabledata, filter="top", escape = FALSE, rownames = FALSE,

options = list(

search = list(regex = TRUE, caseInsensitive = TRUE),

pageLength = 20,

lengthMenu = c(25, 50, 100),

autoWidth = TRUE,

columnDefs = list(list(width="80%", targets = 1)) # Post column; indexes start at 0 when rownames = FALSE

))

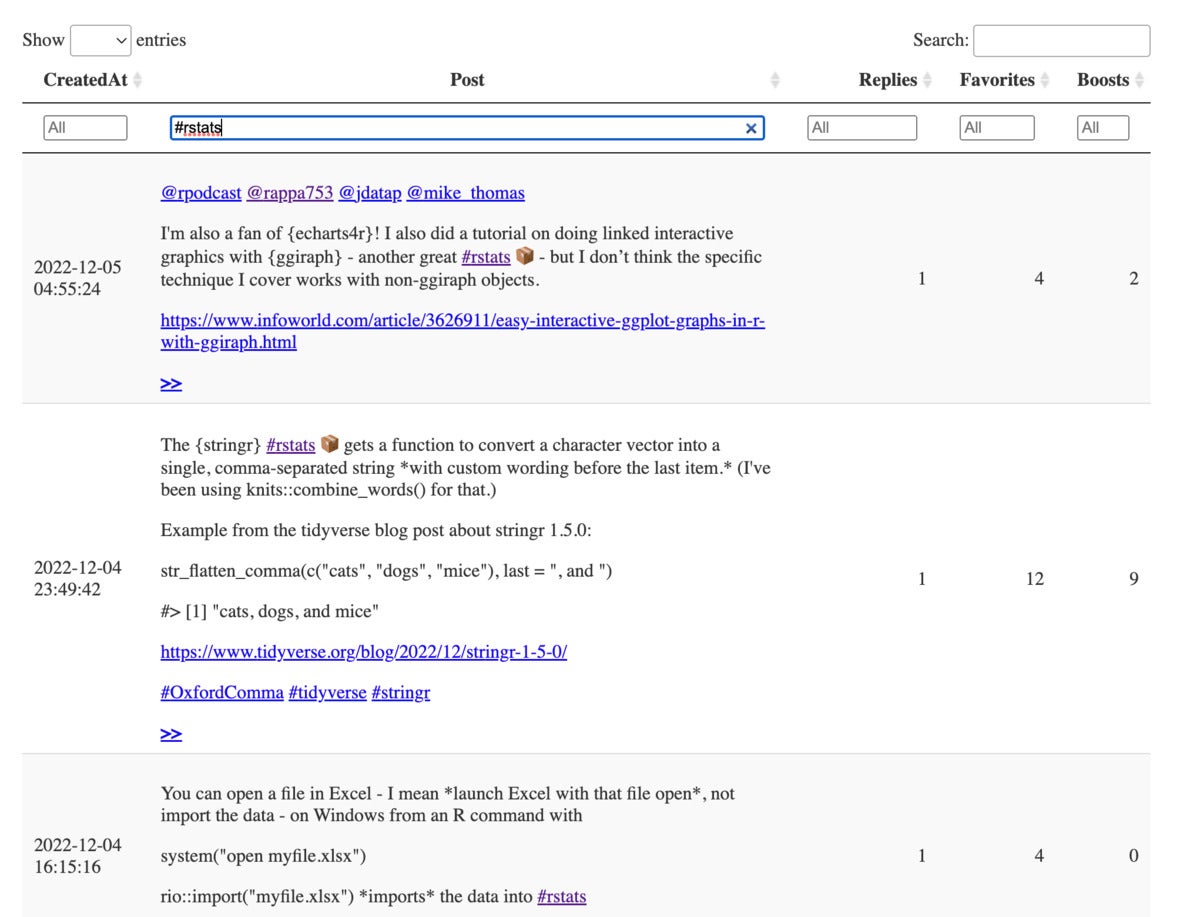

Here’s a screenshot of the resulting table:

Sharon Machlis

Sharon MachlisAn interactive table of my Mastodon posts. This table was created with the DT R package using rtoot.
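If you'd rather search from the R console than in a table, a regular expression filter is another option. The sketch below uses made-up posts, and search_posts() is a hypothetical helper, not part of rtoot. It strips HTML tags with a simple pattern before matching, which should be fine for typical post markup but is not a full HTML parser:

```r
library(dplyr)

# Made-up posts standing in for the content column, which holds HTML.
posts_demo <- tibble(
  created_at = c("2022-12-01", "2022-12-02"),
  content = c(
    "<p>Trying out the <strong>rtoot</strong> package</p>",
    "<p>Lunch was great today</p>"
  )
)

# Hypothetical helper: strip tags, then keep rows matching a regex.
search_posts <- function(data, pattern) {
  data |>
    mutate(text = gsub("<[^>]+>", "", content)) |>
    filter(grepl(pattern, text, ignore.case = TRUE))
}

hits <- search_posts(posts_demo, "rtoot")
hits$text
```

Searching the stripped text means a query won't accidentally match tag names or link attributes.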

How to pull in new Mastodon posts

It’s easy to update your data to pull new posts, because the get_account_statuses() function includes a since_id argument. To start, find the maximum ID from the existing data:

max_id <- max(smach_statuses$id)

Next, seek an update with all the posts since the max_id:

new_statuses <- get_account_statuses(my_id, since_id = max_id,

limit = 10, verbose = TRUE)

all_statuses <- bind_rows(new_statuses, smach_statuses)
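One thing to watch: as far as I can tell, status IDs come back as text, so max() compares them character by character rather than as numbers. That works when every ID has the same number of digits, which seems to be the usual case, but it can go wrong if lengths differ. Here is a defensive sketch with made-up IDs that orders by length first and then by value:

```r
# Made-up IDs of different lengths to show the pitfall.
ids <- c("99", "100")

# Plain max() compares text, so the shorter "99" wins here.
text_max <- max(ids)

# Order by number of digits first, then by value, and take the last one.
newest_id <- ids[order(nchar(ids), ids)][length(ids)]

newest_id
```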

If you want to see updated engagement metrics for some recent posts in existing data, I’d suggest getting the last 10 or 20 overall posts instead of using since_id. You can then combine that with the existing data and dedupe by keeping the first item. Here is one way to do that:

new_statuses <- get_account_statuses(my_id, limit = 25, verbose = TRUE)

all_statuses <- bind_rows(new_statuses, smach_statuses) |>

distinct(id, .keep_all = TRUE)
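To see why the order inside bind_rows() matters, here is the same pattern on made-up data. Post "2" appears in both pulls with different favorite counts, and because the new data comes first, distinct() keeps the updated row:

```r
library(dplyr)

# Made-up data: post "2" appears in both pulls with different counts.
new_demo <- tibble(id = c("3", "2"), favourites_count = c(0L, 5L))
old_demo <- tibble(id = c("2", "1"), favourites_count = c(1L, 4L))

# New rows first, so distinct() keeps the fresher copy of each ID.
combined_demo <- bind_rows(new_demo, old_demo) |>
  distinct(id, .keep_all = TRUE)

combined_demo
```

If the old data came first in bind_rows(), the stale counts would be the ones that survive.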

How to read your downloaded Mastodon archive

There is another way to get all your posts, which is particularly useful if you’ve been on Mastodon for some time and have a lot of activity over that period. You can download your Mastodon archive from the website.

In the Mastodon web interface, click the little gear icon above the left column for Settings, then Import and export > Data export. You should see an option to download an archive of your posts and media. You can only request an archive once every seven days, though, and it will not include any engagement metrics.

Once you download the archive, you can unpack it manually or, as I prefer, use the archive package (available on CRAN) to extract the files. I’ll also load the jsonlite, stringr, and tidyr packages before extracting files from the archive:

library(archive)

library(jsonlite)

library(stringr)

library(tidyr)

archive_extract("name-of-your-archive-file.tar.gz")

Next, you’ll want to look at outbox.json’s orderedItems. Here’s how I imported that into R:

my_outbox <- fromJSON("outbox.json")[["orderedItems"]]

my_posts <- my_outbox |>

unnest_wider(object, names_sep = "_")
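To get a feel for what unnest_wider() does here, the sketch below runs it on a tiny made-up stand-in for the outbox. It assumes the object column is a list-column in which a post holds named fields while a boost holds only a URL string, and the example URLs are hypothetical:

```r
library(dplyr)
library(tidyr)

# Made-up stand-in for orderedItems: one post and one boost.
outbox_demo <- tibble(
  type = c("Create", "Announce"),
  object = list(
    list(content = "<p>Hello</p>", url = "https://example.social/@me/1"),
    "https://example.social/@other/2"
  )
)

# Each named field becomes its own column, prefixed with "object_".
posts_wide <- outbox_demo |>
  unnest_wider(object, names_sep = "_")

names(posts_wide)
```

Rows that lack a field, such as the boost here, end up with a missing value in that column, which is why filtering on object_content is useful later.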

From there, I created a data set for a searchable table similar to the one from the rtoot results. This archive includes all activity, such as favoriting another post, which is why I’m filtering both for type Create and to make sure object_content has a value. As before, I add a >> clickable URL to the post content and tweak how dates are displayed:

search_table_data <- my_posts |>

filter(type == "Create") |>

filter(!is.na(object_content)) |>

mutate(

url = paste0("<a target='blank' href='", object_url,"'><strong> >></strong></a>")

) |>

rename(CreatedAt = published, Post = object_content) |>

mutate(CreatedAt = str_replace_all(CreatedAt, "T", " "),

CreatedAt = str_replace_all(CreatedAt, "Z", " "),

Post = str_replace(Post, "<\\/p>$", " "),

Post = paste0(Post, " ", url, "</p>")

) |>

select(CreatedAt, Post) |>

arrange(desc(CreatedAt))

Then, it takes just one more function call to make a searchable table with DT:

datatable(search_table_data, rownames = FALSE, escape = FALSE,

filter="top", options = list(search = list(regex = TRUE)))

This is handy for your own use, but I wouldn’t use archive results to share publicly, since it’s less obvious which of these might have been private messages (you’d need to do some filtering on the to column).
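For anyone who does want to try that filtering, here is a rough sketch. In ActivityPub, public posts are addressed to a special Public collection URI. The sketch assumes that to is a list-column of recipient URIs after import, and the data and the friend URL are made up. Note that unlisted posts typically carry the Public URI in cc rather than to, so this keeps only fully public posts:

```r
library(dplyr)

# The special ActivityPub address that marks a post as public.
public_uri <- "https://www.w3.org/ns/activitystreams#Public"

# Made-up posts; `to` is a list-column of recipient URIs.
archive_demo <- tibble(
  object_content = c("<p>Public post</p>", "<p>Private note</p>"),
  to = list(public_uri, "https://example.social/users/friend")
)

# Keep rows whose recipients include the Public address.
public_posts <- archive_demo |>
  filter(vapply(to, function(x) public_uri %in% x, logical(1)))

public_posts$object_content
```

I'd still spot-check the results by hand before publishing anything from an archive.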

If you have any questions or comments about this article, you can find me on Mastodon at [email protected] as well as occasionally still on Twitter at @sharon000 (although I’m not sure for how much longer). I’m also on LinkedIn.

For more R tips, head to InfoWorld’s Do More With R page.

Copyright © 2022 IDG Communications, Inc.